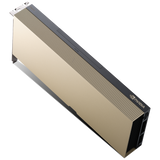

NVIDIA H200 NVL Tensor Core GPU 141GB HBM3e PCIe Gen 5.0 – Hopper Architecture AI Accelerator

High-Performance AI Accelerator for HPC & LLM Workloads - PNs: 900-21010-0040-000, 699-21010-0230-B00

The NVIDIA H200 NVL Tensor Core GPU is a data center accelerator built on the Hopper architecture and optimized for AI, HPC, and generative workloads. With 141GB of HBM3e memory at 4.8 TB/s and a PCIe Gen 5.0 x16 interface, the H200 NVL targets training and inference of large language models (LLMs), deep learning, and scientific computing.

With fourth-generation Tensor Cores, NVLink scalability, and Multi-Instance GPU (MIG) support for up to seven instances, the H200 NVL accelerates AI pipelines from model training to real-time inference. Designed for AI clusters and hyperscale data centers, it offers 4.8 TB/s of memory bandwidth, energy efficiency, and compatibility with NVIDIA’s software stack, including CUDA, cuDNN, and TensorRT.

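The H200 NVL is positioned as an upgrade path for servers that currently run A100 or H100 PCIe GPUs. Because the card is passively cooled, confirm that the chassis meets its power and airflow requirements (see the specifications below) before swapping cards.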

⚙️ Product Specifications: NVIDIA H200 NVL PCIe GPU

| Category | Details |
|---|---|
| Model | NVIDIA H200 NVL Tensor Core GPU |
| Part Numbers | 900-21010-0040-000, 699-21010-0230-B00 |
| GPU Architecture | NVIDIA Hopper |
| CUDA Cores | 14,592 |
| Tensor Cores | 456 (4th Gen) |
| Memory | 141GB HBM3e |
| Memory Bandwidth | 4.8 TB/s |
| Interconnect | PCIe Gen 5.0 x16 |
| NVLink Support | Yes, up to 900 GB/s GPU-GPU bandwidth |
| MIG Support | Up to 7 GPU instances |
| Performance | FP64: 34 TFLOPS / FP32: 68 TFLOPS / FP8: up to 1,979 TFLOPS |
| Form Factor | PCIe Dual Slot |
| Cooling | Passive (requires chassis airflow) |
| Power Consumption (TDP) | 700W |
| System Compatibility | NVIDIA-certified servers and AI platforms |
| Software Stack | CUDA, cuDNN, TensorRT, NCCL, NVLink, NVIDIA AI Enterprise |
| Use Cases | AI training, LLMs, HPC simulations, inference, cloud computing |
| Compliance | RoHS, WEEE, CE, FCC, UL certified |
| Warranty | Standard manufacturer warranty (varies by reseller) |
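
The memory and bandwidth figures in the table can be turned into a rough sizing estimate. The sketch below is a back-of-envelope calculation, not a benchmark: it assumes weights dominate memory use (KV cache and activations are ignored) and that single-stream decoding reads every weight once per generated token, so memory bandwidth sets a ceiling on tokens per second. The function names and the model sizes are hypothetical, chosen only for illustration.

```python
# Back-of-envelope sizing from the spec table (illustrative, not a benchmark).
MEMORY_GB = 141        # table: Memory
BANDWIDTH_TBPS = 4.8   # table: Memory Bandwidth

# Bytes per parameter for two common weight formats.
BYTES_PER_PARAM = {"fp16": 2, "fp8": 1}


def weight_gb(params_billion: float, dtype: str) -> float:
    """Weight footprint in GB (1 GB = 1e9 bytes); ignores KV cache and activations."""
    return params_billion * BYTES_PER_PARAM[dtype]


def fits(params_billion: float, dtype: str) -> bool:
    """True if the weights alone fit in the card's memory."""
    return weight_gb(params_billion, dtype) <= MEMORY_GB


def decode_tokens_per_s_ceiling(params_billion: float, dtype: str) -> float:
    """Bandwidth-bound ceiling for batch-1 decoding (assumption: one full weight read per token)."""
    return BANDWIDTH_TBPS * 1000 / weight_gb(params_billion, dtype)


if __name__ == "__main__":
    for size in (13, 70, 180):  # hypothetical model sizes, in billions of parameters
        for dtype in ("fp16", "fp8"):
            print(
                f"{size}B {dtype}: {weight_gb(size, dtype):.0f} GB, "
                f"fits={fits(size, dtype)}, "
                f"ceiling={decode_tokens_per_s_ceiling(size, dtype):.1f} tok/s"
            )
```

Real throughput depends on batch size, KV-cache size, and kernel efficiency, so treat the ceiling as an upper bound rather than an expected figure.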

❓ Frequently Asked Questions (FAQs)

Q1: What is the main difference between the NVIDIA H200 and H100 GPUs?

A1: The H200 moves to HBM3e memory (141GB at 4.8 TB/s), which gives it nearly double the memory capacity of the H100 and about 1.4× its memory bandwidth. The extra capacity and bandwidth mainly benefit large-scale AI model training and inference.

Q2: What workloads benefit most from the H200 NVL?

A2: It’s ideal for AI training, inference, LLMs (like GPT-4 scale models), scientific simulations, and high-performance data analytics.

Q3: Can the H200 NVL be used in existing A100 or H100 systems?

A3: Yes, the H200 NVL is intended as an upgrade for A100 or H100 PCIe environments, provided the server meets the card's power and airflow requirements.
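
After an upgrade, one way to confirm that the driver sees the new card is to query `nvidia-smi` for the GPU name, total memory, and power limit. The sketch below wraps that query in Python; the helper names (`parse_gpu_rows`, `query_gpus`) are hypothetical, and the script assumes an NVIDIA driver with `nvidia-smi` on the PATH.

```python
import csv
import io
import shutil
import subprocess

# Standard nvidia-smi query fields: product name, total memory (MiB), power limit (W).
QUERY_FIELDS = "name,memory.total,power.limit"


def _to_float(text: str):
    """Return a float, or None when nvidia-smi reports a non-numeric value such as [N/A]."""
    try:
        return float(text)
    except ValueError:
        return None


def parse_gpu_rows(csv_text: str) -> list:
    """Parse `--format=csv,noheader,nounits` output into one dict per GPU."""
    rows = []
    for rec in csv.reader(io.StringIO(csv_text), skipinitialspace=True):
        if len(rec) != 3:
            continue  # skip blank or unexpected lines
        name, mem_mib, power_w = (field.strip() for field in rec)
        rows.append(
            {
                "name": name,
                "memory_mib": _to_float(mem_mib),
                "power_limit_w": _to_float(power_w),
            }
        )
    return rows


def query_gpus() -> list:
    """Run nvidia-smi and return parsed rows; returns [] if the tool is not installed."""
    if shutil.which("nvidia-smi") is None:
        return []
    result = subprocess.run(
        ["nvidia-smi", f"--query-gpu={QUERY_FIELDS}", "--format=csv,noheader,nounits"],
        capture_output=True,
        text=True,
        check=True,
    )
    return parse_gpu_rows(result.stdout)


if __name__ == "__main__":
    try:
        gpus = query_gpus()
    except subprocess.CalledProcessError as err:
        gpus = []
        print(f"nvidia-smi failed: {err}")
    for gpu in gpus:
        print(gpu)
```

Comparing the reported memory and power limit against the specification table is a quick sanity check that the card is recognized and powered as expected.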

Q4: Does the H200 NVL require special cooling?

A4: It needs no dedicated cooler of its own, but it is passively cooled and relies on the host chassis for airflow, so it must be installed in a server that provides sufficient airflow.

Q5: What’s the total memory advantage of the H200 NVL?

A5: With 141GB of HBM3e, it provides the largest memory capacity of any NVIDIA PCIe GPU to date, perfect for training extremely large AI models.
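
To put the 141GB figure in training terms, a common rule of thumb for mixed-precision training with the Adam optimizer is about 16 bytes of state per parameter (FP16 weights and gradients plus FP32 master weights, momentum, and variance). The sketch below applies that rule; the 20% headroom reserved for activations is an assumption chosen for illustration, and the function names are hypothetical.

```python
MEMORY_GB = 141  # H200 NVL memory capacity from the spec table

# Mixed-precision Adam, bytes per parameter:
# fp16 weights (2) + fp16 gradients (2) + fp32 master weights (4)
# + fp32 momentum (4) + fp32 variance (4)
BYTES_PER_PARAM_TRAINING = 2 + 2 + 4 + 4 + 4  # 16


def training_state_gb(params_billion: float) -> float:
    """Model and optimizer state in GB (1 GB = 1e9 bytes), excluding activations."""
    return params_billion * BYTES_PER_PARAM_TRAINING


def max_trainable_params_billion(
    memory_gb: float = MEMORY_GB, activation_headroom: float = 0.2
) -> float:
    """Largest model (billions of parameters) whose training state fits on one card.

    activation_headroom is the assumed fraction of memory kept free for activations.
    """
    return memory_gb * (1 - activation_headroom) / BYTES_PER_PARAM_TRAINING


if __name__ == "__main__":
    print(f"7B model state: {training_state_gb(7):.0f} GB")
    print(f"Single-card limit: about {max_trainable_params_billion():.1f}B parameters")
```

Under these assumptions a single card holds the full training state of a model in the single-digit billions of parameters; larger models are typically split across several GPUs, which is where the NVLink support listed in the specifications comes in.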