NVIDIA Tesla A100 80GB SXM4 GPU (Ampere Architecture, 80GB HBM2e, NVLink, MIG Support)

NVIDIA Tesla A100 80 GB SXM4 GPU - Ampere Architecture for AI & HPC | PN: 699-2G506-0210-300

High-Performance NVIDIA A100 SXM4 GPU for Data Centers, AI, and High-Performance Computing

The NVIDIA Tesla A100 80 GB SXM4 GPU (part number 699-2G506-0210-300 and equivalent PNs in the A100 80 GB SXM4 family) accelerates AI, data analytics, and HPC workloads.

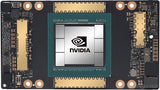

Built on the Ampere architecture (GA100 GPU), it features 80 GB of HBM2e memory, roughly 2 TB/s of memory bandwidth, and 6,912 CUDA cores.

Engineered for multi-GPU NVLink scaling, this A100 SXM4 GPU provides up to 600 GB/s interconnect bandwidth, enabling massive AI model training and scientific simulations with unmatched efficiency.

Whether you’re powering AI research, deep learning clusters, or HPC environments, the NVIDIA A100 SXM4 delivers consistent performance, scalability, and energy efficiency trusted by leading enterprises and research institutions.

✔️ In Stock & Ready to Ship – Tested & Certified

⚙️ Product Specifications: NVIDIA Tesla A100 80 GB SXM4 GPU


| Specification | Details |
|---|---|
| Model / Part Numbers | 699-2G506-0210-300 (and equivalent A100 SXM4 80GB variants) |
| Architecture | NVIDIA Ampere (GA100 GPU) |
| CUDA Cores | 6,912 |
| Tensor Cores | 432 |
| GPU Memory | 80 GB HBM2e |
| Memory Bandwidth | 2,039 GB/s |
| FP64 Performance | 9.7 TFLOPS |
| FP32 Performance | 19.5 TFLOPS |
| TF32 (Tensor Float 32) | 156 TFLOPS |
| FP16 / BF16 Performance | 312 TFLOPS |
| INT8 Tensor Core | 624 TOPS (1,248 TOPS with structured sparsity) |
| Form Factor | SXM4 module (for GPU-accelerated servers) |
| NVLink Bandwidth | Up to 600 GB/s |
| MIG Support | Up to 7 instances per GPU |
| TDP | 400 W |
| Cooling | Passive (server chassis airflow required) |
| Die Size / Transistors | 826 mm², ~54 B transistors |
| Certifications | NVLink, MIG, DGX/AEP compatible |
| Weight | Approx. 2 lb (heatsink), 11 lb full module |
| Condition | Used / Tested |
| Availability | In Stock – Ready to Ship |
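
As a rough sanity check on the table above, the FP32 and FP64 figures can be reproduced from the CUDA core count. This is a minimal sketch, not an official formula from this listing: it assumes a boost clock of 1.41 GHz (a value not stated on this page), counts one fused multiply-add as two floating-point operations, and assumes FP64 runs at half the FP32 rate. The function name `peak_tflops` is purely illustrative.

```python
# Values from the specification table.
CUDA_CORES = 6912

# ASSUMPTION: boost clock in GHz; this number is not given in the listing.
BOOST_CLOCK_GHZ = 1.41


def peak_tflops(cores: int, clock_ghz: float, flops_per_cycle: int = 2) -> float:
    """Theoretical peak throughput in TFLOPS.

    cores * flops_per_cycle * clock_ghz yields GFLOPS; divide by 1000 for TFLOPS.
    flops_per_cycle=2 treats one fused multiply-add as two operations.
    """
    return cores * flops_per_cycle * clock_ghz / 1000.0


fp32 = peak_tflops(CUDA_CORES, BOOST_CLOCK_GHZ)
# ASSUMPTION: non-Tensor-Core FP64 throughput is half of FP32.
fp64 = fp32 / 2

print(f"FP32 peak: {fp32:.1f} TFLOPS")
print(f"FP64 peak: {fp64:.1f} TFLOPS")
```

These are theoretical peaks; sustained throughput on real workloads depends on memory access patterns, clocks under load, and chassis cooling.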

❓ Frequently Asked Questions (FAQs)

Q1: Which workloads benefit most from the A100 SXM4 GPU?

A: Deep learning model training, AI inference, HPC simulations, and large-scale analytics all leverage the A100’s Ampere Tensor Core performance.

Q2: Are there differences between the various A100 SXM4 part numbers?

A: No. Equivalent PNs (e.g., 699-2G506-0210-300) share the same specifications and performance; they differ mainly in regional, batch, or OEM labeling.

Q3: What’s the cooling requirement?

A: The SXM4 module uses passive cooling and requires robust server chassis airflow for proper thermal performance.

Q4: Does it support Multi-Instance GPU (MIG)?

A: Yes, up to seven isolated GPU instances per device for flexible workload partitioning.
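
To illustrate how that partitioning might be planned, here is a small sketch that picks the smallest MIG slice able to hold a workload of a given memory size. The profile names and sizes are taken from NVIDIA's MIG documentation for the A100 80 GB rather than from this listing, the list is not exhaustive, and `smallest_profile` is a hypothetical helper, not part of any NVIDIA tool. Usable memory per instance is somewhat below the nominal figure, so treat the result as a first estimate.

```python
# (profile name, nominal memory in GB, max instances of that profile per GPU)
# ASSUMPTION: a subset of A100 80 GB profiles as named in NVIDIA's MIG documentation.
MIG_PROFILES = [
    ("1g.10gb", 10, 7),
    ("2g.20gb", 20, 3),
    ("3g.40gb", 40, 2),
    ("7g.80gb", 80, 1),
]


def smallest_profile(required_gb: float) -> tuple[str, int]:
    """Return (profile name, max instances) for the smallest slice that fits."""
    for name, mem_gb, max_instances in MIG_PROFILES:
        if mem_gb >= required_gb:
            return name, max_instances
    raise ValueError(f"{required_gb} GB exceeds a single A100 80 GB")


for need in (8, 16, 35, 80):
    name, count = smallest_profile(need)
    print(f"{need} GB workload -> {name} (up to {count} per GPU)")
```

For example, a workload needing about 8 GB maps to the smallest slice, which is where the seven-instances-per-GPU figure comes from.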

Q5: Is this compatible with NVIDIA DGX systems?

A: Yes. It is designed for DGX, AEP, and other enterprise GPU platforms with SXM4 sockets. As an SXM4 module, it does not fit a standard PCIe slot.